51 Ways to Spell the Image Giraffe: The Hidden Politics of Token Languages in Generative AI

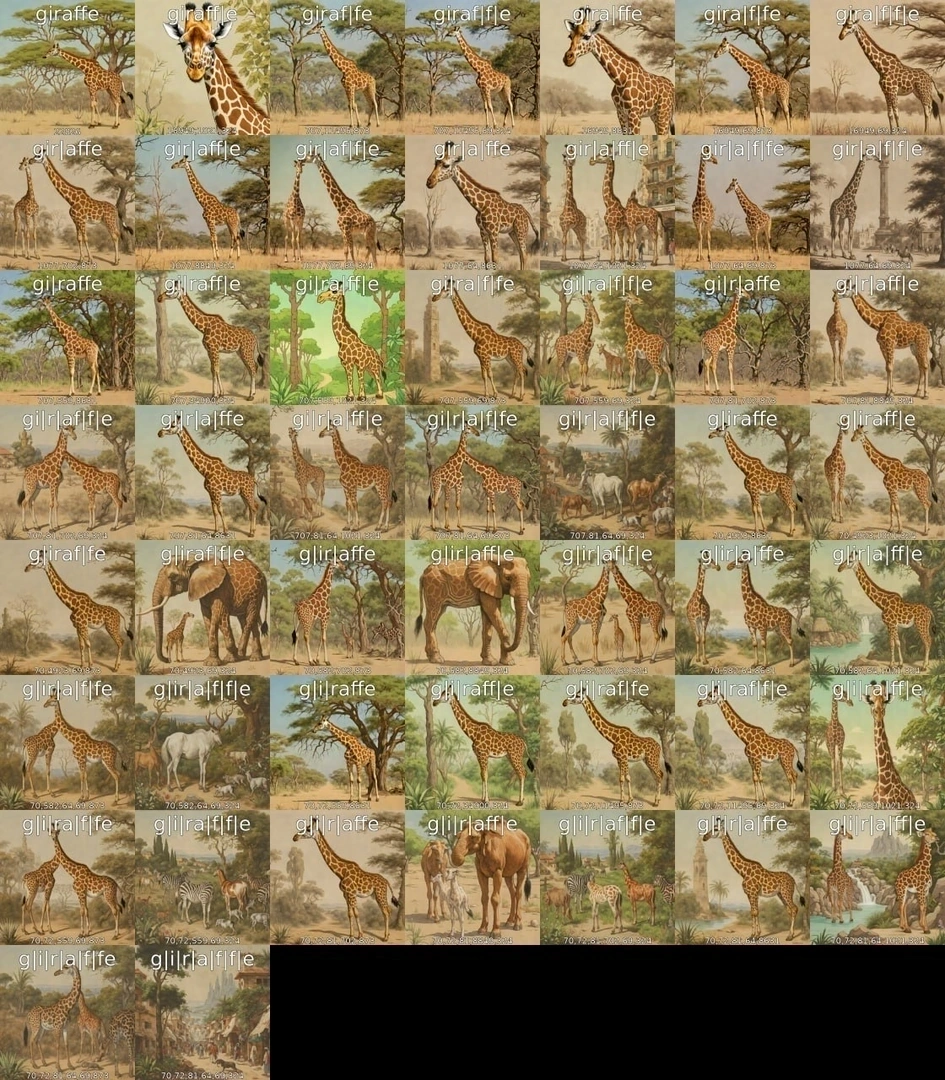

Tokens are the fragments of words that generative models use to process language, the step that breaks text into subword units before any neural networks are involved. There are 51 ways to combine tokens to spell the word giraffe using existing vocabulary: from a single token giraffe to splits using multiple tokens like gi|ra|ffe, gira|f|fe, or even g|i|r|af|fe.

In one experiment, we hijacked the prompting process and fed token combinations directly to text-to-image models. With variations like g|iraffe or gir|affe still generating recognizable results, our experiments show that the beginning and end of tokens hold particular semantic weight in forming giraffe-like images. This reveals that certain images cannot be generated through prompting alone, as the tokenization process sanitizes most combinations, suggesting that English, or any human language, is merely a subset of token languages.

The talk features experiments using genetic algorithms to reverse-engineer prompts from images, respelling words in token language to change their generative outcomes, and critically examining token dictionaries to investigate edge cases where the vocabulary breaks down entirely, producing somewhat speculative languages that include strange words formed at the edge of chaos where English meets token (non-)sense.

These experiments show that even before generation occurs, token dictionaries already encode a stochastic worldview, shaped by the statistical frequencies of their training data – dominated by popular culture, brands, platform-speak, and non-words. Tokenization is, therefore, a political act: it defines what can be represented and how the world becomes computationally representable. We will look at specific tokens and ask: Which models use which vocabularies? What non-word tokens are shared among models? And how do language models make sense of a world using a language we do not understand?

Speakers of this event

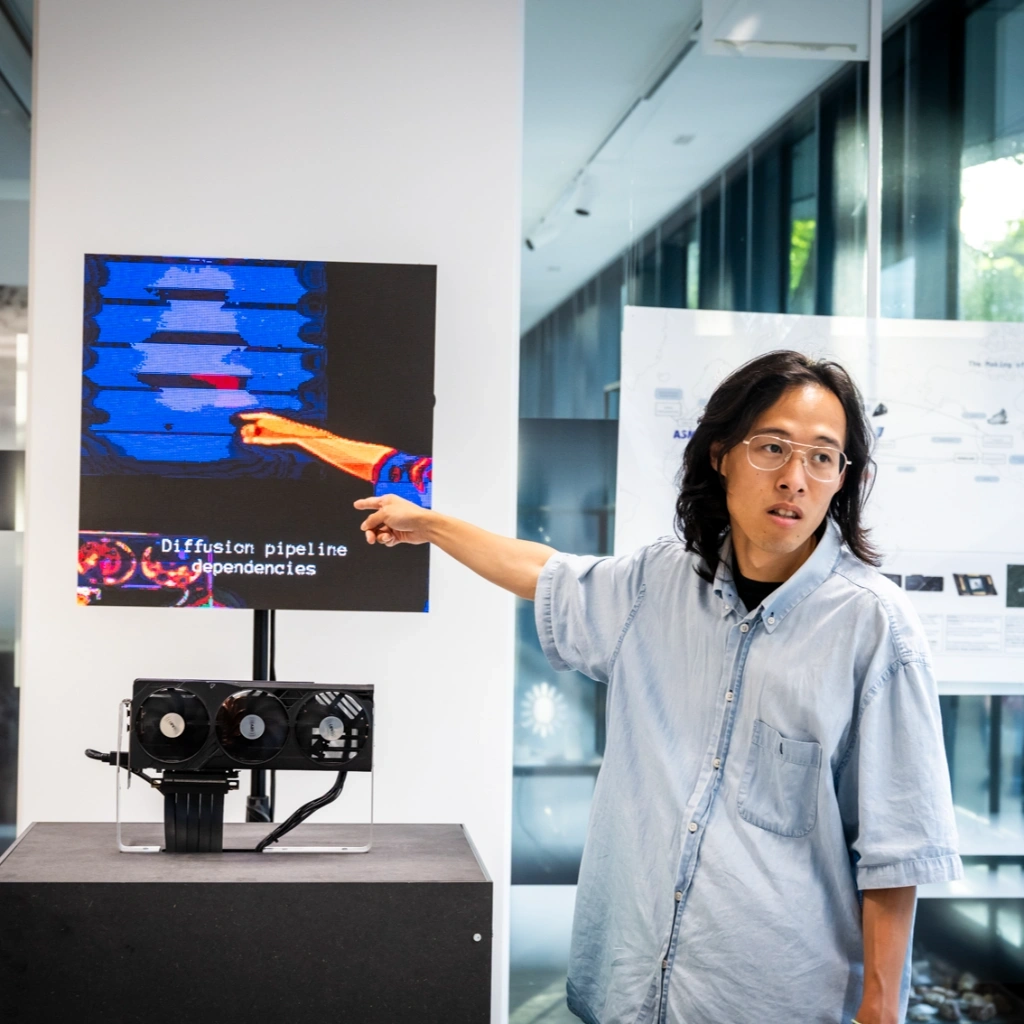

Ting-Chun Liu

Ting-Chun Liu (Taipei, Taiwan) is an artist whose practice and research operate at the intersections of critical artificial intelligence, audiovisual media, and network practice. Through dissecting technological systems with feedback-driven interventions, he examines how perception, aesthetics, and power dynamics propagate through computational processes. He studied at the Academy of Media Arts Cologne, and currently works as an artistic associate and lecturer at the Bauhaus University Weimar.

Leon-Etienne Kühr

Leon-Etienne Kühr works as a computer scientist and media artist with methods of information visualization, data science, and artificial intelligence. In his practice, he investigates the mechanisms of AI-driven automation and the phenomena of the increasingly intertwined relationship between the world and its digital representation in generative AI. He is a research assistant and co-director of the AI Lab at the Offenbach University of Art and Design and previously studied media arts at the KHM Cologne and media informatics at the Bauhaus University Weimar.